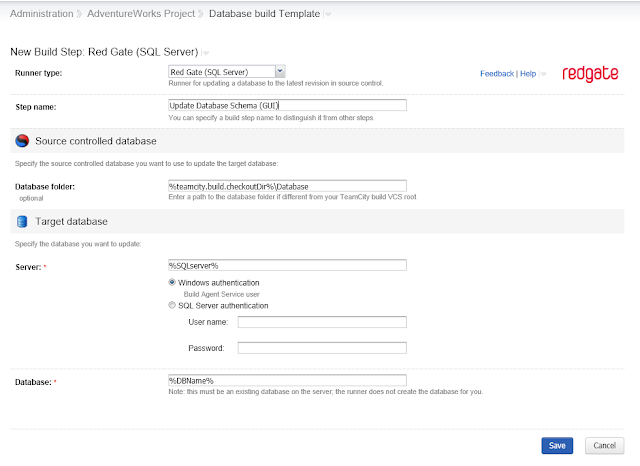

This new assert routine, AssertLike, solves one of the more common situations which I've had to work around - that where you know roughly the message you are checking for, but not the totality of it. Let's take the example of a stored procedure which gets all addresses for a given state ID. This is somewhat contrived - there are reasons why you wouldn't do exactly this in a real application, but it makes for an easy to follow example. I'm using the AdventureWorks 2012 database.

/*

Version : 1.0

Written on : 26/12/2012

Written by : Dave Green

Purpose of SP : To retrieve all address records in a given state.

*/

CREATE PROCEDURE dbo.usp_GetAddressesInState

@StateProvinceCode VARCHAR(100)

AS

DECLARE @StateProvinceID INT, @ErrorMessage VARCHAR(100)--Attempt to get province ID from StateProvince tableSELECT @StateProvinceID = StateProvinceID FROM Person.StateProvince WHERE StateProvinceCode = @StateProvinceCode

IF @StateProvinceID IS NULL --If the stateprovince wasn't valid, raise an errorBEGIN

SET @ErrorMessage = 'Unable to get StateProvinceID'

RAISERROR (@ErrorMessage,16,1)END

--Return customer recordsSELECT AddressID ,

AddressLine1 , AddressLine2 ,

City , StateProvinceID ,

PostalCode , SpatialLocation ,

ModifiedDateFROM Person.Address WHERE StateProvinceID = @StateProvinceIDGOThis Stored Proc checks that the Province ID exists before it returns the results; this means that no results mean that there are no results, rather than an invalid (or not on record) state. This sort of distinction is quite common for applications where you want to return a more helpful message to the user to allow them to take action.

We can test this SP using the following tSQLt code:

/*

Version : 1.0

Written on : 26/12/2012

Written by : Dave Green

Purpose of Class : Demo of AssertLike

*/

EXEC tSQLt.NewTestClass 'ALDemo'

GO

/*

Version : 1.0

Written on : 26/12/2012

Written by : Dave Green

Purpose : check that invalid States give an error

*/

CREATE PROCEDURE ALDemo.[test invalid State code produces error]

AS

/**********************************************

**** To verify that the SP throws an error **** when fed with an invalid state code

***********************************************/

--Assemble

--Act

-- Execute the code under testBEGIN TRY

EXEC dbo.usp_GetAddressesInState @StateProvinceCode = 'test' -- varchar(100)

--Assert EXEC tSQLt.Fail 'Expected error was not thrown'

END TRY

BEGIN CATCH DECLARE @Actual NVARCHAR(MAX) = ERROR_MESSAGE() EXEC tSQLt.AssertEqualsString @Expected = N'Unable to get StateProvinceID', -- nvarchar(max)

@Actual = @Actual, -- nvarchar(max)

@Message = 'The error thrown was different to that which was expected' -- nvarchar(max)

END CATCH

GO

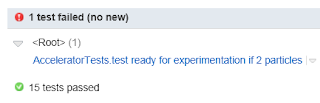

EXEC tSQLt.Run 'ALDemo' -- Run the testThis works successfully, and tests the proc, checking that the correct error message is thrown (i.e. there isn't another error which is causing the proc to fail first).

This is all well and good, but then a requirement for change comes that the error message shown to the caller should specify the erroneous value. This is easily accomplished in the SP:

/*

Written on : 26/12/2012

Written by : Dave Green

Purpose : Get address records for a given state.

*/

/* Updated by Dave Green 26/12/2012 to return any erroneous state in the error message.*/

ALTER PROCEDURE usp_GetAddressesInState

@StateProvinceCode VARCHAR(100)

AS

DECLARE @StateProvinceID INT, @ErrorMessage VARCHAR(100)--Attempt to get province ID from StateProvince tableSELECT @StateProvinceID = StateProvinceID FROM Person.StateProvince WHERE StateProvinceCode = @StateProvinceCode

IF @StateProvinceID IS NULL --If the stateprovince wasn't valid, raise an errorBEGIN

SET @ErrorMessage = 'Unable to get StateProvinceID - Province ' +@StateProvinceCode+' may be invalid.'

RAISERROR (@ErrorMessage,16,1)END --Return customer recordsSELECT AddressID ,

AddressLine1 , AddressLine2 ,

City , StateProvinceID ,

PostalCode , SpatialLocation ,

ModifiedDateFROM Person.Address WHERE StateProvinceID = @StateProvinceIDGOHowever, how can we adjust the unit test to accommodate this? The error message will change each time the SP is called - to reflect the input parameter.

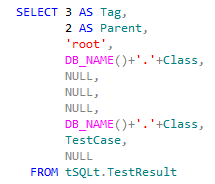

The answer has always been to put in place custom logic in the 'CATCH' block - 'If error message is like this, then fine, else fail'.

This is realised in code as:

BEGIN CATCH

DECLARE @Actual NVARCHAR(MAX) = ERROR_MESSAGE()

IF @Actual IS NULL OR @Actual NOT LIKE 'Unable to get StateProvinceID - Province % may be invalid.'

EXEC tSQLt.Fail @Message0 = 'The error thrown was different to that which was expected' END CATCHOf course, if you are checking a specific value, or date/time, this gets more complicated. A line number in the error message makes life even more unpredictable. This is in my view a little ugly, and doesn't lead itself to easy reading in the same way that the simple assert did. The good news is that the latest version of tSQLt now has an AssertLike assert procedure, so we can now simplify this as:

BEGIN CATCH

DECLARE @Actual NVARCHAR(MAX) = ERROR_MESSAGE()

EXEC tSQLt.AssertLike @ExpectedPattern = N'Unable to get StateProvinceID - Province % may be invalid.', -- nvarchar(max)

@Actual = @Actual, -- nvarchar(max)

@Message = N'The error thrown was different to that which was expected' -- nvarchar(max)END CATCHThe new assert gives us a standardised approach which also includes a null check (on both sides). This allows a neater approach than custom logic for this basic type of check, and has particular applicability where the line number of an error is reported back as part of the message. This is because tests would then not break solely for a comment being added to the procedure, thus reducing the work required to 'fix' such tests when such a comment is added.

This seems to me to be much neater, and should be more a maintainable test for the future too, as it follows the same form as asserts in other tests.